Hands-On 5: Tune the temporary storage locations (CAS Disk cache)

In this hands-on, we will change the default CAS Disk Cache location (an emptyDir volume) so that it points to a specific host directory instead. In a customer scenario, this directory would sit on a high-performance storage system (for example, in the Cloud, it could be the path of the Cloud instance's NVMe/SSD temporary drive or the mount point of a RAID stack of multiple SSD drives). (Tip #1)

Objectives of the hands-on

- Create the directories for the CAS_DISK_CACHE on each Kubernetes node used for CAS pods.

- Create a patchTransformer manifest to:

  - mount the directories to the CAS pods.

  - configure CAS to use the new CAS_DISK_CACHE location.

- Re-run the deployment playbook to pick up and implement the configuration changes.

Step-by-step instructions

Create a directory on the CAS node(s) for the CAS disk cache.

- The kubectl debug command can be used to gain access to the underlying node’s shell (the debugging Pod can access the root filesystem of the Node, mounted at /host in the Pod).

- We will use the debug command to create the cdc-default directory on the CAS node. Note that this is a shortcut for this exercise; in real projects, changes on the underlying nodes are usually made in a more repeatable and automated way.

- Open the Root Terminal.

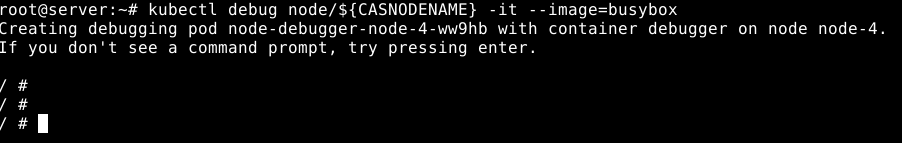

- Run the following command (it starts a debug pod):

      # in our environment there is only 1 CAS node (SMP)
      CASNODENAME=node-4
      kubectl debug node/${CASNODENAME} -it --image=server.demo.sas.com:5000/busybox

  This command will open an interactive session on the node where we run the CAS pod.

You will see something like this:

- Once you are connected, run the following commands to create the directory, set the permissions, and check the result.

      mkdir -p /host/cdc-default
      chmod 777 /host/cdc-default
      ls -l /host | grep cdc

  You should see:

      drwxrwxrwx 2 root root 4096 Jan 29 11:46 cdc-default

- DO NOT FORGET to exit from the debug pod by typing:

      exit
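The three commands above can also be wrapped in a small function that is safe to re-run, which is closer to the repeatable approach mentioned earlier. This is only a minimal sketch: the function name `prepare_cdc_dir` and its arguments are hypothetical, and it assumes a POSIX shell with `ls` and `cut` available (as in the busybox image).

```shell
# Hypothetical helper: create a CAS disk cache directory under a given root
# and confirm that it ends up world-writable (mode 777).
# Inside the kubectl debug pod, the node's root filesystem is /host.
prepare_cdc_dir() {
    root="$1"                  # e.g. /host when run from the debug pod
    name="$2"                  # e.g. cdc-default
    dir="${root%/}/${name}"    # strip a trailing slash before joining

    mkdir -p "$dir" || return 1
    chmod 777 "$dir" || return 1

    # The first 10 characters of `ls -ld` are the file type and permission bits.
    perms=$(ls -ld "$dir" | cut -c1-10)
    if [ "$perms" = "drwxrwxrwx" ]; then
        echo "OK $dir"
    else
        echo "UNEXPECTED $perms $dir" >&2
        return 1
    fi
}

# Example (inside the debug pod):
#   prepare_cdc_dir /host cdc-default
```

Because `mkdir -p` and `chmod` are both idempotent, calling the function a second time on the same node leaves the directory unchanged.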

Create the PatchTransformer for the CAS Disk cache configuration.

- Now that the directory exists on the CAS node, we need to create a “PatchTransformer” manifest that:

  - adds this new hostPath volume and the associated volumeMount

  - sets the CASENV_CAS_DISK_CACHE environment variable so that CAS will use our new directory.

- Run the following command (copy and paste in the Root Terminal window).

      tee /root/edu_k3dv5/edu/site-config/edu/cas-manage-casdiskcache.yaml > /dev/null << EOF
      ---
      apiVersion: builtin
      kind: PatchTransformer
      metadata:
        name: cas-add-host-mount-casdiskcache
      patch: |-
        - op: add
          path: /spec/controllerTemplate/spec/volumes/-
          value:
            name: casdiskcache
            hostPath:
              path: /cdc-default
              type: Directory
        - op: add
          path: /spec/controllerTemplate/spec/containers/0/volumeMounts/-
          value:
            name: casdiskcache
            mountPath: /casdiskcache
      target:
        group: viya.sas.com
        kind: CASDeployment
        name: .*
        version: v1alpha1
      ---
      apiVersion: builtin
      kind: PatchTransformer
      metadata:
        name: cas-add-environment-variables-casdiskcache
      patch: |-
        - op: add
          path: /spec/controllerTemplate/spec/containers/0/env/-
          value:
            name: CASENV_CAS_DISK_CACHE
            value: "/casdiskcache"
      target:
        group: viya.sas.com
        kind: CASDeployment
        name: .*
        version: v1alpha1
      EOF

Note: Since we used the viya4-deployment tool to deploy SAS Viya, there is no need to add a reference in the kustomization.yaml file; our new configuration file will be picked up automatically.
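The manifest only works if the volumeMount's mountPath and the value of CASENV_CAS_DISK_CACHE are the same path, so it can be worth checking that before redeploying. The sketch below is one possible way to do it with `awk`. The function name `check_cdc_patch` is hypothetical, and the parsing is deliberately naive: it assumes the simple key/value layout of the manifest in this exercise and is not a general YAML parser.

```shell
# Hypothetical sanity check: the mountPath of the volumeMount and the value of
# CASENV_CAS_DISK_CACHE must be the same path inside the pod.
check_cdc_patch() {
    file="$1"

    # First "mountPath:" entry found in the file.
    mount=$(awk '$1 == "mountPath:" { print $2; exit }' "$file")

    # The "value:" line that follows "name: CASENV_CAS_DISK_CACHE",
    # with the surrounding double quotes removed.
    envval=$(awk '
        found && $1 == "value:" { gsub(/"/, "", $2); print $2; exit }
        $1 == "name:" && $2 == "CASENV_CAS_DISK_CACHE" { found = 1 }
    ' "$file")

    if [ -n "$mount" ] && [ "$mount" = "$envval" ]; then
        echo "OK $mount"
    else
        echo "MISMATCH mountPath=$mount CASENV_CAS_DISK_CACHE=$envval" >&2
        return 1
    fi
}

# Example:
#   check_cdc_patch /root/edu_k3dv5/edu/site-config/edu/cas-manage-casdiskcache.yaml
```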

Redeploy with the configuration change

- Copy and paste the following command in the Root Terminal window to re-run the Viya deployment playbook (so that the configuration changes are picked up):

      /root/edu_k3dv5/scripts/redeploy.sh

- It should take between 1 and 2 minutes to complete. Optionally, while the redeployment is running, you can open OpenLens to look at potential changes in the Pods workload.

- To see the pods in Lens, click the catalog icon, select k3d-edu, then under Workload, click Pods.

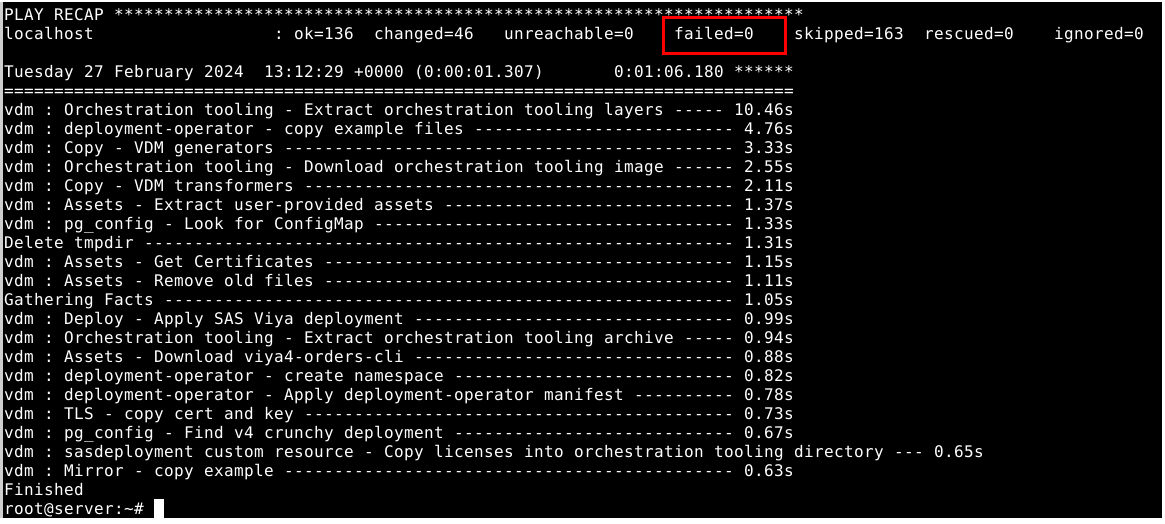

If the redeployment was successful you should see something like this in the Terminal window:

- Now, since the deployment is managed by the SAS Deployment operator, you need to wait for the SAS Deployment operator’s reconcile job to complete.

-

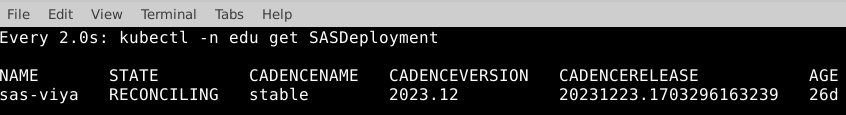

You can then, monitor the progress of the job by running the command below in the Root Terminal window:

watch 'kubectl -n edu get SASDeployment' -

You should see something like this:

- The reconciling step should take around 5 minutes.

- When you see SUCCEEDED in the STATE column, type <Ctrl+C> to exit the watch command.

- Now restart the CAS Server so that it is aware of the new CAS_DISK_CACHE configuration.

      kubectl -n edu delete pod --selector="casoperator.sas.com/server==default"
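If you prefer not to watch the reconcile step interactively, the same wait can be scripted as a polling loop. This is a rough sketch rather than a supported procedure: the function name `wait_for_sasdeployment` and its timeout and interval arguments are hypothetical, and it simply assumes that the text SUCCEEDED appears in the `kubectl get SASDeployment` output once the reconcile has finished, as described above.

```shell
# Hypothetical helper: poll the SASDeployment resource until its listing
# contains SUCCEEDED, or give up after a timeout (in seconds).
wait_for_sasdeployment() {
    ns="$1"                  # namespace, e.g. edu
    timeout="${2:-600}"      # total time to wait, in seconds
    interval="${3:-10}"      # delay between polls, in seconds (must be > 0)

    elapsed=0
    while [ "$elapsed" -le "$timeout" ]; do
        if kubectl -n "$ns" get SASDeployment --no-headers 2>/dev/null \
            | grep -q 'SUCCEEDED'; then
            echo "SUCCEEDED after ${elapsed}s"
            return 0
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done

    echo "timed out after ${timeout}s" >&2
    return 1
}

# Example: wait up to 10 minutes, then restart CAS only if the reconcile succeeded.
#   wait_for_sasdeployment edu 600 10 && \
#     kubectl -n edu delete pod --selector="casoperator.sas.com/server==default"
```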

Check the configuration changes and new location for CAS DISK CACHE

- Check that the CAS Controller pod has a mounted volume pointing to “/cdc-default”.

- Run the following command (it waits for the CAS Controller to restart before checking its configuration):

      kubectl wait -n edu --for=condition=ready pod --selector="casoperator.sas.com/server==default"
      kubectl -n edu describe pod sas-cas-server-default-controller | grep casdiskcache -B 2 -A 5

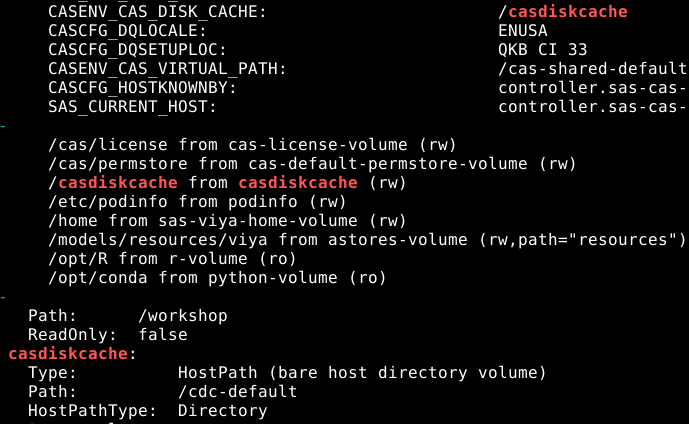

You should see something like this:

- As you can see in the command’s output, the CASENV_CAS_DISK_CACHE variable is set to /casdiskcache (which is the path for the CAS Disk Cache inside the pod). This path, inside the pod, corresponds to the casdiskcache volume, which is defined as a hostPath volume type pointing to /cdc-default on the CAS physical node.
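The visual check with `describe` and `grep` can also be expressed as a scripted check that reads the variable directly from the pod specification with a JSONPath query. This is a minimal sketch: the function names `cas_disk_cache_env` and `check_cas_disk_cache` are hypothetical, and it assumes that only one container in the pod defines CASENV_CAS_DISK_CACHE (otherwise the query returns several space-separated values).

```shell
# Hypothetical helper: print the value of CASENV_CAS_DISK_CACHE as declared
# in the pod spec (looks across all containers of the pod).
cas_disk_cache_env() {
    ns="$1"
    pod="$2"
    kubectl -n "$ns" get pod "$pod" \
        -o jsonpath='{.spec.containers[*].env[?(@.name=="CASENV_CAS_DISK_CACHE")].value}'
}

# Hypothetical helper: compare the declared value with the expected path.
check_cas_disk_cache() {
    expected="$3"
    actual=$(cas_disk_cache_env "$1" "$2") || return 1
    if [ "$actual" = "$expected" ]; then
        echo "OK CASENV_CAS_DISK_CACHE=$actual"
    else
        echo "MISMATCH expected=$expected actual=$actual" >&2
        return 1
    fi
}

# Example:
#   check_cas_disk_cache edu sas-cas-server-default-controller /casdiskcache
```

Note that this only confirms what is declared in the pod specification; the SAS Environment Manager check shows what the running CAS server actually picked up.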

- Optional: check in SAS Environment Manager whether our new variable for the CAS Disk Cache has been set.

- Note: you need to wait for the CAS pod to be completely restarted before checking in SAS Environment Manager (it can take more than 7 minutes).

- Run the command below to watch the CAS controller restart progress.

      watch "kubectl -n edu get pod sas-cas-server-default-controller"

- In the SAS App, click the “hamburger” icon and select Manage Environment (or click the SAS Environment Manager shortcut in the bookmarks toolbar of Google Chrome).

- If needed, log in as student (the password is Metadata0) and choose Yes when asked to opt in to all of your assumable groups.

- Navigate to the Servers page by clicking the Servers icon on the left-hand side.

- Right-click cas-shared-default and select the Configuration option.

- Navigate to the Nodes tab.

- Choose the Controller node, right-click it, and select the Runtime Environment option.

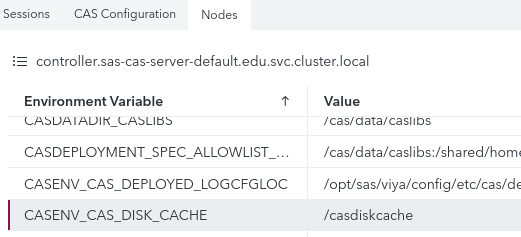

- Scroll through the Environment Variable table until you find the CAS_DISK_CACHE variable.

- You should see this:

- You can now sign out; the configuration of a custom CAS Disk Cache location is complete.

What have we learned?

- Changing the default location of the temporary storage areas for CAS and Compute requires changes in the kustomize configuration and a redeployment.

- However, it is strongly recommended to provision and configure sufficient, high-performance storage for them. The default location and volume type for CAS Disk Cache and SASWORK (the node’s root directories with the “emptyDir” volume type) should never be used in production environments.

- In this example, to keep things simple, we have used the hostPath volume type. However, this option may not always be acceptable for security reasons. Alternative options exist, such as the Kubernetes generic ephemeral volume (with dynamic provisioning) or the Cloud provider’s specific storage capabilities (for example, AKS ephemeral OS disks in Azure).
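To give an idea of what the generic ephemeral volume alternative could look like, the sketch below prints a PatchTransformer in which the hostPath volume is replaced by a Kubernetes `ephemeral.volumeClaimTemplate` definition. It is an illustration only, not the documented SAS procedure: the function name `ephemeral_volume_patch`, the transformer name, the storage class, and the size are all hypothetical, and the volumeMount and CASENV_CAS_DISK_CACHE patches from this exercise would still be needed alongside it.

```shell
# Hypothetical generator: print a PatchTransformer that defines the
# casdiskcache volume as a generic ephemeral volume instead of a hostPath.
# Both arguments are placeholders to adapt to the target cluster.
ephemeral_volume_patch() {
    storage_class="$1"    # an existing StorageClass with dynamic provisioning
    size="$2"             # requested capacity, e.g. 100Gi

    cat << EOF
---
apiVersion: builtin
kind: PatchTransformer
metadata:
  name: cas-add-ephemeral-casdiskcache
patch: |-
  - op: add
    path: /spec/controllerTemplate/spec/volumes/-
    value:
      name: casdiskcache
      ephemeral:
        volumeClaimTemplate:
          spec:
            accessModes: ["ReadWriteOnce"]
            storageClassName: ${storage_class}
            resources:
              requests:
                storage: ${size}
target:
  group: viya.sas.com
  kind: CASDeployment
  name: .*
  version: v1alpha1
EOF
}

# Example (the storage class name is an assumption):
#   ephemeral_volume_patch my-fast-ssd 100Gi > cas-ephemeral-casdiskcache.yaml
```

With this volume type, Kubernetes creates a PersistentVolumeClaim together with the pod and deletes it when the pod is removed, so no directory has to be prepared on the node beforehand.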

References

- Engineering CAS performance, Hardware Network, and Storage Considerations for CAS Servers

- Tuning the CAS Server

- Tune CAS_DISK_CACHE
